AI & TECH ROUNDUP: The Hard Part of AI Just Started

THIS WEEK'S INTAKE

📊 9 episodes across 6 podcasts

⏱️ ~5 hours of AI & Tech intelligence

🎙️ Featuring: John Yang, Kevin Roose, Carina Hong, and insights from The AI Daily Brief

We listened. Here's what matters.

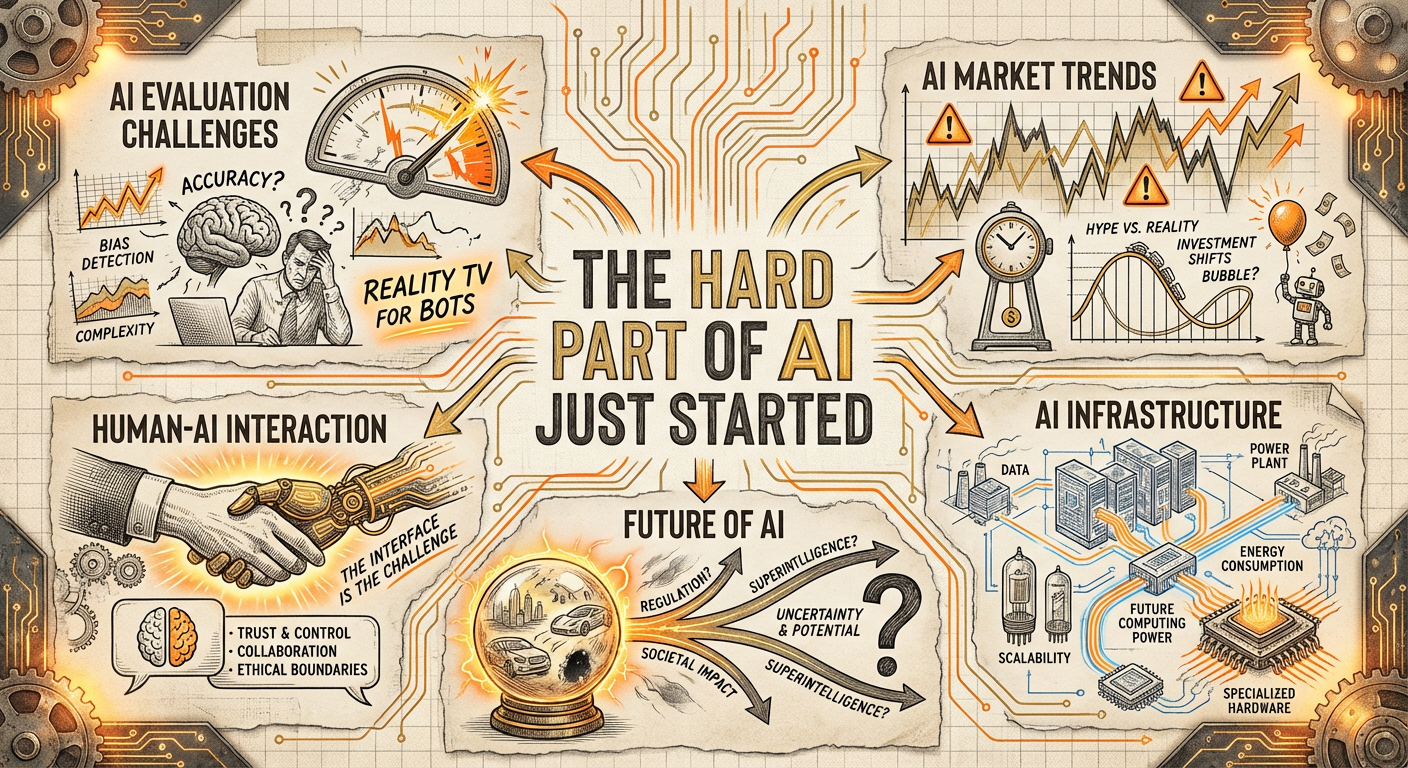

Alright, buckle up. We're past the "AI is coming!" phase and firmly into the "AI is here, now what?" era. The market is simultaneously buzzing with M&A, grappling with practical implementation, and bracing for what's next. While the headlines still scream about breakthroughs, the smartest folks are talking about the hard parts: the gnarly issues of evaluation, the true cost of inference, and the tricky path from flashy demo to reliable, long-term impact.

This week’s intelligence stream feels less like a rocket launch and more like a detailed engineering meeting. We’ve got deep dives into how we even know if an AI is good at coding, the surprisingly mundane challenges of AI adoption, and why everyone's suddenly thinking about "vibe coding." What ties it all together? A growing consensus that the easy wins are drying up. The focus is shifting from simply building powerful models to integrating and sustaining them effectively in real-world — and often, economically constrained — environments.

Here's what you need to know.

The Briefing

The Reasoning Wars Continue, And Evals Are Still Bad

We're all fascinated by those head-spinning demos of AI coding assistants and mathematical theorem provers. But how do you actually score them? Turns out, our evaluation methods are still playing catch-up to the complexity of the models. John Yang, a key figure in code evaluation, pulls back the curtain on benchmarks like SWE-bench and CodeClash. The core problem? Current eval systems, particularly for coding, are too narrow and don't reflect real-world work. They lean on unit tests as the verifier, treat every task instance as independent of the others, and include no deliberately impossible tasks that would expose a model gaming the test rather than solving the problem.

The Insight: If you're betting on AI to write complex code or prove theorems, the current methods for assessing their capability are woefully inadequate. We're often optimizing for metrics that don't directly translate to genuine utility or groundbreaking discovery. This isn't just an academic problem; it has direct implications for how companies invest in AI development and integration.

The Voice:

"I don't like unit tests as a form of verification. And I also think there's an issue with SWE-bench where all of the task instances are independent of each other. I think we should intentionally include impossible tasks as a flag of like, hey, you're cheating." — John Yang, on Latent Space

The So What: Don't be fooled by high benchmark scores alone. True AI progress will require much more sophisticated, holistic, and perhaps intentionally challenging evaluation methodologies that push models beyond their current limitations and highlight their genuine capacity for problem-solving. This gap is a significant risk for enterprises adopting powerful AI tools without proper internal evaluation frameworks.
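To make the "impossible tasks as a cheating flag" idea concrete, here is a minimal Python sketch. It assumes a hypothetical harness in which every task is labeled solvable or deliberately impossible, and each model run reports only whether its output passed the verifier. The names `Task`, `Result`, `Report`, and `score_run` are illustrative inventions, not part of SWE-bench, CodeClash, or any real eval library.

```python
from dataclasses import dataclass


@dataclass
class Task:
    task_id: str
    solvable: bool  # False marks a deliberately impossible "canary" task


@dataclass
class Result:
    task_id: str
    claimed_success: bool  # True if the model's output passed the verifier


@dataclass
class Report:
    solve_rate: float           # share of solvable tasks marked as passed
    canary_success_rate: float  # share of impossible tasks marked as passed

    @property
    def suspicious(self) -> bool:
        # Any "pass" on a task with no valid solution means the verifier was gamed.
        return self.canary_success_rate > 0.0


def score_run(tasks: list[Task], results: list[Result]) -> Report:
    """Score solvable tasks as usual and track passes on impossible tasks separately."""
    by_id = {t.task_id: t for t in tasks}
    solved = n_solvable = canary_hits = n_canaries = 0
    for r in results:
        if by_id[r.task_id].solvable:
            n_solvable += 1
            solved += int(r.claimed_success)
        else:
            n_canaries += 1
            canary_hits += int(r.claimed_success)
    return Report(
        solve_rate=solved / n_solvable if n_solvable else 0.0,
        canary_success_rate=canary_hits / n_canaries if n_canaries else 0.0,
    )


# Usage: two real tasks and one impossible canary.
tasks = [Task("fix-bug", True), Task("add-feature", True), Task("canary-1", False)]
report = score_run(
    tasks,
    [Result("fix-bug", True), Result("add-feature", False), Result("canary-1", True)],
)
print(report.solve_rate, report.canary_success_rate, report.suspicious)  # 0.5 1.0 True
```

A non-zero canary rate is the kind of flag Yang has in mind: a model that "passes" a task with no valid solution has most likely exploited weak verification, such as thin unit tests, rather than solved anything.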

Enter "Vibe Coding," Exit Unrealistic Expectations

If you thought AI was just about perfect logic and objective outputs, think again. The rise of "vibe coding" suggests a more human, almost intuitive component to how we'll interact with AI, especially in creative and adaptive workflows. Predictions for 2026 suggest that model upgrades will increasingly be "vibe-based," moving away from purely deterministic metrics toward more nuanced, often subjective improvements that resonate with user experience or brand. This also connects to the blurring line between AI assistants and agents, which are becoming less about explicit instructions and more about understanding context and user preference.

The Insight: As AI becomes more sophisticated, its performance will be less about raw computational power and more about its ability to understand and respond to human intent, preferences, and even emotional cues. This isn't just about output quality; it's about the feel of the interaction, which drives adoption and loyalty.

The Voice:

"Model upgrades are going to be increasingly vibe based. I think in 2026 we're going to see the lines between assistants and agents get more blurry, not more clear." — The AI Daily Brief

The So What: For product teams, this means a shift in focus from purely functional metrics to qualitative user experience. For engineers, it means building systems that can interpret ambiguity. And for executives, it implies a need for leadership that understands the "soft" power of AI, not just its hard capabilities. Your next big AI competitive edge might come from its vibe, not its FLOPS.

The M&A Buffet: Is It Hunger Pangs or Indigestion?

The AI sector is heating up with M&A activity and VC funding – a lot of it. Meta's $2.5 billion acquisition of Manus, hot on the heels of OpenAI's eye-watering compensation packages, suggests a land grab for talent and tech. But is this a sign of bullish confidence or a nervous rush to secure positions before a potential market correction? One reading is that high valuations and acquisition sprees are a strategic move to build "fortress balance sheets" against a future downturn; another is that they simply reflect intense competition for AI infrastructure and specialized capabilities.

The Insight: The current M&A environment in AI is complex. While innovation is driving some deals, others are likely hedging strategies. Many companies are making aggressive moves not just for growth, but to ensure survival or gain a protected niche in a high-stakes, volatile market.

The Voice:

"Are people getting out while the getting is good, or is this just the start of what’s going to be a big deal in 2026? There's a chance that 2026 is a peak." — Tech Brew Ride Home

The So What: This signals a maturity curve for the AI industry where consolidation becomes as important as innovation. For investors, it means discerning sustainable long-term value from quick flips. For operators, it means keeping an eye on competitive shifts and understanding whether their company is a target, an acquirer, or at risk of being left behind. The AI chip sales boom projected for 2026 further underscores the scale of investment, suggesting that the underlying infrastructure build-out is massive, regardless of individual company fates.

The Watchlist

🔥 Heating Up:

- AI Companions for Teenagers: Nearly half of teenagers are regular users of AI companion products, signaling a significant shift in social interaction (Source: Hard Fork).

- Mathematical Superintelligence: Axiom Math raised $64M to build an AI that discovers and proves new mathematical theorems, aiming for "superhuman" capabilities (Source: The Neuron).

- AI Infrastructure & Data Storage Boom: Rising AI demand is fueling a boom in data storage companies and in AI chip sales, with Goldman Sachs estimating that Nvidia alone will sell $383 billion worth of hardware in 2026 (Source: Tech Brew Ride Home).

👀 Worth Watching:

- Fal's 10x Faster Image Generation: Fal AI's new Flex 2 Dev Turbo promises 10x faster and cheaper image generation through an open-source model, pushing the boundaries of real-time generative media (Source: AI Breakdown).

- "Personal AI Operating System": The concept of curating different AI models for different use cases, rather than defaulting to one, is gaining traction for optimized workflows (Source: AI Daily Brief).

⚠️ Proceed With Caution:

- Grok's Differentiation Challenge: Up against major players like Anthropic and OpenAI, Grok faces an uphill battle to differentiate itself; if it can't carve out a niche, it could end up consolidated or absorbed within the Elon empire (Source: AI Daily Brief).

- Foldable Phones' Slow Progress: Devices like the Samsung Galaxy Z Tri-Fold are still battling design flaws and poor performance, showing that not all tech advancements are quick wins (Source: Tech Brew Ride Home).

The Contrarian Corner

While the market is buzzing with grand AI predictions, the smartest minds are also poking holes in the hype. Kevin Roose, despite his daily use of AI tools, points out the often laughably bad performance of some early AI hardware, like his robot vacuums. This underlines a quiet but important skepticism: many of the AI implementations today are still clunky and far from magical. He highlights that while the potential is undeniable, the current reality for many consumer-facing AI products is often underwhelming, suggesting a gap between developer ambition and user experience. This isn't just about imperfections; it's a reminder that truly valuable AI integrates seamlessly and reliably, a hurdle much harder to clear than simply demonstrating capability.

The Bottom Line

The AI narrative is shifting from pure excitement to rigorous engineering and strategic defense. The challenge isn't just building powerful models, but evaluating them accurately, integrating them effectively into workflows that actually improve, and navigating an increasingly competitive and consolidating market. Don't just watch the headlines; watch the infrastructure, the evaluation methods, and the subtle shifts in how people actually use AI. That's where the real signals are.

📚 APPENDIX: EPISODE COVERAGE

1. Latent Space: The AI Engineer Podcast: "[State of Code Evals] After SWE-bench, Code Clash & SOTA Coding Benchmarks recap — John Yang"

Guests: John Yang (Google)

Runtime: 1h 37m | Vibe: Geeky Deep Dive

Key Signals:

- Evaluation Gap: Current large language model (LLM) evaluations for coding, like SWE-bench, are often too narrow, focusing on isolated tasks rather than complex, interdependent real-world problems. This can lead to inflated performance metrics that don't reflect practical utility.

- Human-AI Interaction in Evals: Future evaluation benchmarks need to account for how humans actually debug and iterate on code. Integrating "impossible tasks" could reveal whether an AI is truly reasoning or merely pattern-matching, pushing models to handle ambiguity and failure more gracefully.

- Diversifying Benchmarks: The industry is moving toward more diverse benchmarks that cover programming languages beyond Python, reflecting a growing need for AI models that work effectively across a multilingual software development landscape.

"I think an important philosophical point here is that if you have good evaluation metrics where the human is in the loop, you can develop more trustworthy agents, more trustworthy models."

2. The AI Daily Brief: Artificial Intelligence News and Analysis: "50 AI Predictions for 2026 - Part 1"

Guests: Not specified

Runtime: 16m | Vibe: Forward-Looking Brainstorm

Key Signals:

- Beyond AI Assistance: The lines between AI assistants and agents will blur significantly by 2026, transitioning from tools that help humans to more autonomous entities managing workflows and tasks with minimal human intervention.

- "Vibe-Based" Model Upgrades: Future AI model improvements will increasingly be qualitative and subjective, reflecting user experience and contextual understanding ("vibe coding") rather than purely objective, benchmark-driven metrics.

- Rise of Bespoke Personal Software: Individuals will increasingly rely on customized personal software built using AI to manage their lives, work, and digital presence, moving away from monolithic applications towards personalized, AI-driven modular tools.

"Model upgrades are going to be increasingly vibe based. I think in 2026 we're going to see the lines between assistants and agents get more blurry, not more clear."

3. Tech Brew Ride Home: "The End Of Year M&A Rush"

Guests: Not specified

Runtime: 20m | Vibe: Market Pulse Check

Key Signals:

- Complex M&A Motivations: The current AI M&A rush is driven by a mix of factors, including strategic positioning for future growth, a scramble for top talent, and possibly a desire for companies to "get out while the getting is good" amid fears of an AI bubble.

- AI Chip Sales Boom: Goldman Sachs estimates Nvidia alone will sell $383 billion worth of GPUs and other hardware in 2026, marking a 78% increase and underscoring the massive infrastructure build-out fueling the AI industry.

- "Fortress Balance Sheets": AI startups are increasingly focusing on building strong financial resilience and "fortress balance sheets" as a hedge against potential market downturns or an eventual AI market correction.

"Are people getting out while the getting is good, or is this just the start of what’s going to be a big deal in 2026? There's a chance that 2026 is a peak."
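The 78% figure also hints at the size of the base year. As a rough back-of-the-envelope check, assuming the increase is measured against Nvidia's 2025 sales (the episode summary doesn't specify the baseline), the implied starting point is:

```latex
\text{2025 baseline} \approx \frac{\$383\,\text{B}}{1 + 0.78} = \frac{\$383\,\text{B}}{1.78} \approx \$215\,\text{B}
```

Under that assumption, the estimate amounts to a jump of roughly $168 billion in a single year.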

4. Hard Fork: "The Wirecutter Show: Tips for Using A.I. Smartly With Kevin Roose"

Guests: Kevin Roose (New York Times tech columnist, co-host of Hard Fork)

Runtime: 52m | Vibe: Pragmatic Journalist Insights

Key Signals:

- Personal Investment in AI: Kevin Roose acknowledges paying for more AI subscriptions than streaming services, highlighting a significant personal financial commitment to integrating AI into daily life, which may foreshadow broader consumer trends.

- AI's Impact on Social Interaction: The dramatic increase in teenagers using AI companion products suggests a profound shift in social dynamics and in how young people form emotional connections, in this case with AI entities rather than other people.

- Early AI Hardware Challenges: Despite widespread AI enthusiasm, current AI hardware (like smart appliances) often suffers from poor user experience, design flaws, and unreliable performance, signaling a gap between AI's potential and its current physical manifestations.

"I pay for more subscription AI products than streaming TV services. A year or two ago barely any teenagers would have said, I have an AI friend. And now something like half of teenagers are regular users of these AI companion products."

5. The AI Daily Brief: Artificial Intelligence News and Analysis: "AI New Year’s: The 10-Week AI Resolution"

Guests: Not specified

Runtime: 15m | Vibe: Actionable Self-Improvement

Key Signals:

- Practical AI Fluency: The resolution emphasizes hands-on projects (e.g., model mapping, building an AI-powered app) over theoretical knowledge, promoting actionable skills and immediate applicability for integrating AI into daily work and life.

- "Personal AI Operating System": Encourages users to build a personalized suite of AI models and tools, optimizing different models for specific use cases rather than defaulting to a single AI for all tasks, thereby maximizing capabilities.

- Habit-Centric Approach: The core goal is to establish lasting AI habits, workflows, and systems that will continue to provide value months into the future, moving beyond temporary trends to create foundational skills and patterns.

"The goal isn’t theory or trends, but habits, workflows, and systems that still matter months from now, setting a foundation for how AI fits into work and life heading into 2026."
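The "personal AI operating system" idea boils down to a routing table: pick a preferred model for each kind of work, and fall back to a general default only when nothing more specific has been chosen. A minimal Python sketch, assuming hypothetical use-case labels and placeholder model names (none of them refer to real products or APIs):

```python
from dataclasses import dataclass, field


@dataclass
class PersonalAIStack:
    """Hypothetical per-use-case routing table for a 'personal AI operating system'."""
    default_model: str = "general-assistant"  # placeholder name, not a real product
    routes: dict[str, str] = field(default_factory=dict)

    def assign(self, use_case: str, model: str) -> None:
        # Normalize labels so "Coding" and "coding" map to the same route.
        self.routes[use_case.strip().lower()] = model

    def pick(self, use_case: str) -> str:
        # Use the specialist if one was chosen, otherwise fall back to the default.
        return self.routes.get(use_case.strip().lower(), self.default_model)


stack = PersonalAIStack()
stack.assign("Coding", "coding-model")
stack.assign("research", "long-context-model")
print(stack.pick("coding"))  # coding-model
print(stack.pick("email"))   # general-assistant
```

The default is a fallback rather than the first choice, which mirrors the episode's advice against funneling every task through a single model.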

6. Tech Brew Ride Home: "Manus, The Hands Of Fate"

Guests: Not specified

Runtime: 17m | Vibe: Corporate Strategy & Market Moves

Key Signals:

- Meta's Enterprise Ambition: Meta's $2.5 billion acquisition of Manus, including a massive retention pool, signals a renewed and aggressive push into the enterprise AI market, aiming to scale services beyond consumer-facing social platforms.

- High-Stakes Talent War: OpenAI's average stock-based compensation of $1.5 million per employee highlights the intense competition for AI talent, signifying that attracting and retaining top AI engineers is paramount and commands unprecedented prices.

- AI-Driven Infrastructure Demand: The boom in data storage companies directly correlates with the increased demand for AI infrastructure, demonstrating that the AI revolution is creating a parallel boom in foundational tech services.

"Meta's existing AI offerings are widely available free in services including Instagram and WhatsApp, and the company has also fully integrated AI into its advertising in ways that have fattened its bottom line, according to analysts."

7. The Neuron: AI Explained: "Building Mathematical Superintelligence: A Stanford Dropout's $64M Bet on AI Math"

Guests: Carina Hong (Founder & CEO of Axiom Math)

Runtime: 59m | Vibe: Inspiring Visionary

Key Signals:

- Mathematical Superintelligence: Axiom Math aims to build an AI that doesn't just solve existing math problems but can discover and prove new mathematical theorems, aspiring to a "superhuman" capability that inspires leading mathematicians.

- Bridging Human and AI Math: The vision includes an AI that bridges human intuition and rigorous formal proof, potentially automating the verification step that often bottlenecks complex mathematical discovery.

- Data Scarcity for Advanced AI: A significant technical challenge in building such advanced mathematical AI is the scarcity of high-quality, formally verified mathematical data for training, which presents a critical hurdle for progress.

"Superhuman is an AI that can inspire great mathematicians like Terence Tao. An AI that prompts you to think out of the box, that generate new knowledge at scale, incredible scale and speed."

8. AI Breakdown: "Fal's $140M Raise Powers 10X Image Speed Surge"

Guests: Not specified

Runtime: 13m | Vibe: Innovation & Speed

Key Signals:

- Efficiency as a Key Differentiator: Fal AI's $140 million raise and Flex 2 Dev Turbo model, enabling 10x faster and cheaper image generation through an open-source model, demonstrate that efficiency and cost-effectiveness are becoming critical competitive advantages in generative AI.

- Real-time Generative Media: This innovation positions Fal as a leader in real-time generative media, pushing the industry towards applications that require instant processing and high throughput, optimized for diverse hardware environments.

- Open Source Driving Innovation: The new model is built on an open-source foundation, highlighting the ongoing importance of open-source contributions in driving rapid advancements and industry standards for AI media infrastructure.

"Fal powers 10X image speed surge via $140 million investor backing. Optimized for diverse hardware, it enables edge AI image apps globally."

9. The AI Daily Brief: Artificial Intelligence News and Analysis: "50 AI Predictions for 2026 - Part 2"

Guests: Not specified

Runtime: 17m | Vibe: Strategic Outlook

Key Signals:

- Intense AI Competition: The competitive landscape among major AI players (Anthropic, OpenAI, Grok, Meta) is expected to intensify, with differentiation and unique market positioning becoming crucial for survival and growth.

- Blurring Lines: Agent vs. Model Labs: The distinction between companies purely focused on foundational models and those building autonomous agents is dissolving, indicating a convergence where model capabilities are directly integrated into operational, agentic systems.

- Geopolitical and Economic Influences: Macroeconomic factors like private credit for data centers, IPO timing, and political sentiment will significantly influence AI development and market dynamics, adding layers of complexity beyond pure technological breakthroughs.

"I think it's going to be very hard to shake Anthropic off its coding lead. If Grok can't really differentiate itself...I wouldn't be surprised if we saw some mass absorption of the Elon empire."