AI & TECH ROUNDUP: The Absorption Challenge — From Hype to Hard Reality

THIS WEEK'S INTAKE

📊 11 episodes across 7 podcasts

⏱️ ~6 hours of AI & Tech intelligence

🎙️ Featuring: Mars CEO Poul Weihrauch, ElevenLabs Co-Founder Mati Staniszewski, Max Tegmark, Dean Ball

We listened. Here's what matters.

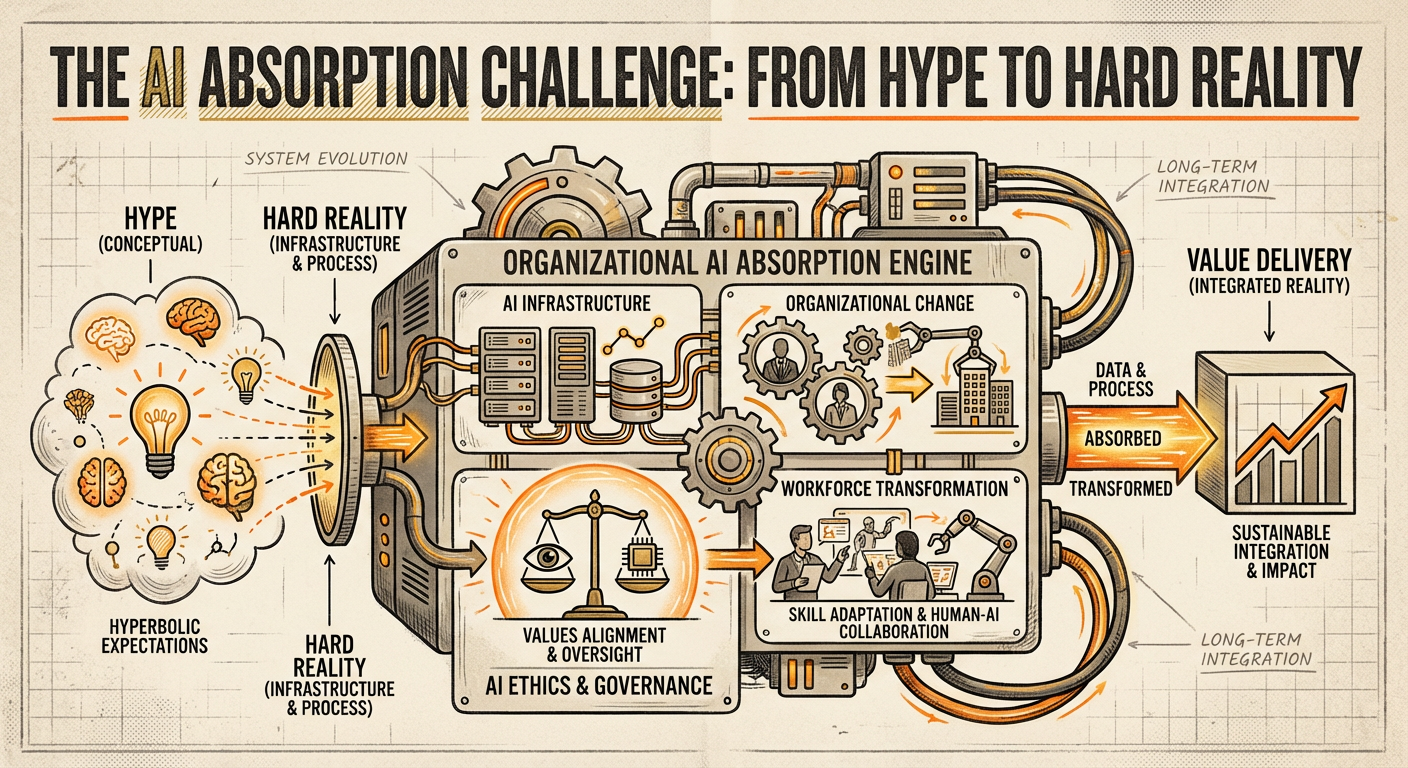

Here's the thing about grand predictions in tech: they always hit the reality of absorption. The AI world is currently brimming with "what if" scenarios, groundbreaking models, and the promise of agentic futures. But this week, the airwaves were loud with a much more grounded question: Can we actually absorb all this AI?

From the sheer physics of energy and compute to the deeply human challenges of talent, organizational structure, and even philosophical debates about banning advanced systems, the consensus bubbling beneath the surface is this: the technology is accelerating, but our capacity to wield it effectively and safely isn't keeping pace. We're moving from a period of "can we build it?" to "can we actually use and integrate this responsibly?" This absorption challenge is where the rubber meets the road for enterprises, governments, and AI's ultimate impact on society. The question has shifted subtly from "why should I care?" to "how do I realistically implement this without breaking everything?"

THE BRIEFING

The Hard Reality of AI Scale: Energy, Compute, and a Skills Gap

We’ve all heard about the insatiable appetite of AI for compute resources. But the conversation is sharpening: it’s not just about chips anymore, it’s about electricity. Azeem Azhar’s Exponential View brought this into stark relief, flagging energy shortages for data centers as a major constraint on widespread AI adoption. This isn't theoretical; it's a very physical limitation that could throttle enterprise AI deployment from 2024 to 2026.

Paired with this, Practical AI highlighted a growing AI engineer skills gap. Companies now expect mid-level practical engineering skills for entry-level AI roles. The academic world often teaches theoretical knowledge, while industry demands the ability to deploy and maintain scalable AI systems. This gap impacts absorption directly: you can have the electricity and the chips, but if you don't have the builders who can translate research into production, you're stuck.

"The real constraint is electricity. It's the physical limitations of this software and whether existing systems can absorb the demands being put on them." — Azeem Azhar

So What: Your AI roadmap needs to factor in more than just model performance. Consider infrastructural dependencies (energy grids, data center availability) and the tangible skills of your team. The best model doesn't matter if it can't run, or if no one can integrate it effectively.

Voice AI: The Ultimate Interface Hits Key Challenges

ElevenLabs Co-Founder Mati Staniszewski believes voice is the interface of the future for smartphones, robots, and deeply interactive AI agents. ElevenLabs is pushing generative audio models to produce human-like speech, translate in real time, and even preserve emotional nuance across languages, which radically improves dubbing for media.

But even here, the absorption challenge is clear: it's not just about technical capability. It's about how to seamlessly integrate these sophisticated voice models into useful products, balancing research breakthroughs with practical product development. The vision of AI personal tutors and truly agentic voice experiences is powerful, but getting there requires overcoming technical hurdles (like latency and naturalness) and then figuring out the user experience for a world where our technology talks back.

"Voice is the interface of the future. As you think about the devices around us, whether it's smartphones, whether it's computers, whether it's robots, speech will be one of the key ones." — Mati Staniszewski, ElevenLabs

So What: As AI becomes more multimodal, voice stands out. Consider how advancements in speech UX could redefine your customer interactions, internal tools, or product offerings. But prioritize user experience and ethical guardrails alongside technical capability.

Enterprise AI: Coding is King, But the Gap Widens

The narrative coming from The AI Daily Brief is clear: AI adoption in enterprises is accelerating, with coding emerging as the first killer app. This isn't just about GitHub Copilot; it's about a foundational shift in how many enterprises are leveraging AI for productivity gains. However, this adoption isn't uniform. New data from OpenAI, Menlo Ventures, and EY points the same way: AI advantage is compounding, not linear. The gap between AI leaders and laggards is widening because leaders are reinvesting their productivity gains to further entrench their AI capabilities, creating a virtuous cycle.

This means that while 96% of surveyed leaders report AI-driven productivity gains, those falling behind are struggling to make the initial leap from isolated experiments to enterprise-wide transformation. The absorption challenge here is organizational: moving beyond pilot programs to systemic integration.
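To make "compounding, not linear" concrete, here is a minimal arithmetic sketch. The starting level, the 10% per-period gain, and the function names are illustrative assumptions, not figures from the OpenAI, Menlo Ventures, or EY data. It compares a laggard whose gains are not reinvested (a fixed increment each period) with a leader who reinvests gains (each period grows from the previous level).

```python
def linear_growth(start: float, rate: float, periods: int) -> float:
    """Gains are not reinvested: every period adds the same fixed increment."""
    return start * (1 + rate * periods)


def compounding_growth(start: float, rate: float, periods: int) -> float:
    """Gains are reinvested: every period grows from the previous period's level."""
    return start * (1 + rate) ** periods


def gap_over_time(start: float = 100.0, rate: float = 0.10, periods: int = 8) -> list[float]:
    """Leader-minus-laggard gap at each period, from 0 through `periods`."""
    return [
        compounding_growth(start, rate, t) - linear_growth(start, rate, t)
        for t in range(periods + 1)
    ]


if __name__ == "__main__":
    # Hypothetical inputs: both start at 100 and gain 10% per period.
    for period, gap in enumerate(gap_over_time()):
        print(f"period {period}: gap = {gap:.1f}")
```

Under these assumptions the two paths are identical after the first period and then separate by a growing amount each period, which is the dynamic the episode describes: the longer a laggard waits, the larger the gap it has to close.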

"Coding is clearly the first killer app… The gap between leaders and laggards is increasing because what constitutes that gap is making the leaders grow faster than the laggers." — The AI Daily Brief

So What: If you’re not already seeing compounding AI benefits, it’s time to aggressively re-evaluate your strategy. Focus on enterprise-wide integration and identify your "killer app" (coding is a strong contender) to avoid falling further behind. The cost of inaction is growing exponentially.

The Organizational Friction: Octopuses vs. Hierarchies

Even with perfect tech, the human element of organizational structure can be the ultimate blocker to AI absorption. HBR IdeaCast's discussion on moving beyond slow, hierarchical organizations was particularly timely. Jana Werner's "octopus organization" metaphor—distributed decision-making, empowered customer-centric teams, and continuous adaptation—is precisely the kind of structure needed to rapidly integrate and leverage fast-moving AI capabilities. Traditional, rigid hierarchies simply cannot keep pace with the iterative, experimental nature of AI deployment.

"The ideal modern organization should be like an octopus with tentacles that work separately but also together with distributed intelligence." — Jana Werner

So What: Your ability to innovate with AI is directly tied to your organizational agility. Reconsider where decisions are made, how teams collaborate, and whether bureaucracy is stifling your AI ambitions. An adaptive structure isn't a "nice-to-have" anymore; it's a prerequisite for AI success.

ON THE RADAR

🔥 Heating Up:

- Real-world hybrid AI stacks: OpenRouter/a16z study shows users combining multiple models and tools, optimizing for specific tasks instead of single-model reliance. (AI Daily Brief)

- Formal AI Certifications: OpenAI is now offering certification programs, indicating a formalization and professionalization of AI skill validation. (AI Daily Brief)

- Corporate Accountability for Sustainability: Mars CEO ties 40% of his compensation to non-financial metrics (like GHG reduction), pushing real executive skin in the game. (HBR IdeaCast)

👀 Worth Watching:

- GenAI.mil: The US military’s new GenAI platform is a significant move, with implications for national security and for large-scale, sensitive AI deployment. (AI Daily Brief)

- The 2026 AI Boom-versus-Bubble Debate: Expect mid-2026 to be a critical inflection point as enterprises evaluate initial AI investments and real ROI. (Exponential View)

⚠️ Proceed With Caution:

- "Superintelligence" Bans: The debate between Max Tegmark and Dean Ball highlights the deep divisions and practical challenges of regulating something so powerful yet ill-defined. A ban could stifle innovation or create a black market. (Cognitive Revolution)

- Undercounting AI Architects: TIME's "Architects of AI" list missed crucial players like China, capital allocators, and enterprise operators, overlooking real centers of influence and power. (AI Daily Brief)

THE CONTRARIAN CORNER

While many are focused on the rapid ascent of AI, particularly "superintelligence," Liron Shapira brought Max Tegmark and Dean Ball together for a "Doom Debate" that highlights a deep philosophical and practical chasm. Tegmark argues for a ban or a regulatory pause on superintelligence development until there's scientific consensus on safe development (drawing parallels to nuclear energy). His concern is profound: that artificial superintelligence has vastly more downside potential than even hydrogen bombs. Dean Ball, however, counters that the concept of superintelligence is too nebulous and hypothetical to effectively legislate. Banning something so undefined could inadvertently hinder beneficial AI advancements, create monopolies, and fail to address the global cooperation needed for any effective regulation. This isn't just a moral debate; it's about the practical difficulty of defining, let alone banning, a future super-technology without understanding its exact form or pathway.

THE BOTTOM LINE

The AI revolution is past its initial "wow" phase and deep into the challenging, often messy, reality of implementation. The real strategic advantage now lies not just in acquiring the technology, but in fundamentally transforming your organization, battling infrastructural constraints, and critically, developing the talent to truly absorb and wield this power effectively.

📚 APPENDIX: EPISODE COVERAGE

1. Practical AI: "The AI engineer skills gap"

Guests: Daniel Whitenack (Host), Chris Benson (Host), Matt Asay (Guest, VP of Data & AI at MongoDB)

Runtime: 59:00 | Vibe: Sobering call to action for AI education

Key Signals:

- Industry vs. Academia Disconnect: There's a significant and growing gap between what AI/Data Science curricula teach (theory) and what industry demands (practical deployment and maintenance of scalable AI systems). Companies are now expecting mid-level engineering skills for entry-level positions.

- AI's Impact on Junior Roles: AI is rapidly automating repeatable tasks traditionally assigned to junior roles, forcing a reshuffle of required skills across the board. The focus is shifting from theoretical knowledge to demonstrable ability to build and deploy.

- The New Hiring Currency: The "new currency" in AI hiring is the ability to build, deploy, and maintain scalable AI systems. This emphasizes practical, hands-on experience over purely academic achievements for those looking to enter or advance in the field.

"It's not about what do you know about from the textbook anymore, it's about what can you build, can you deploy and maintain a real scalable AI system? It's kind of like that's the new currency of hiri..."

2. HBR IdeaCast: "Future of Business: Mars CEO on How Business Can Be a Force for Good"

Guests: Alison Beard (Host), Curt Nickisch (Host), Poul Weihrauch (Guest, CEO of Mars)

Runtime: 30:00 | Vibe: Inspiring deep dive into corporate sustainability

Key Signals:

- Integrated Sustainability Measures: Mars CEO Poul Weihrauch explains their "compass" framework, integrating growth, P&L, responsible business practices, and social/environmental impact into shareholder objectives. This moves beyond traditional CSR to embedding sustainability at the core.

- Executive Compensation Alignment: 40% of the CEO's compensation is tied to non-financial metrics, including greenhouse gas reduction, demonstrating genuine executive-level accountability for sustainability goals. This directly links long-term strategic intent to personal incentives.

- Resilient Supply Chains: Mars prioritizes building resilient supply chains, especially for commodities like cocoa and coffee, through long-term partnerships and direct sourcing. This protects against climate risks while supporting local producers and ensuring quality.

"40% of my compensation is on non financial metrics. So if I have to tease back, I would say to a public listed CEO, how do you feel about having 40% of your compensation on greenhouse gases? That's ch..."

3. The AI Daily Brief: Artificial Intelligence News and Analysis: "What People Are Actually Using AI For Right Now"

Guests: Nathaniel Whittemore (Host)

Runtime: 15:00 | Vibe: Data-driven snapshot of current AI applications

Key Signals:

- Dominant AI Use Cases: A study by OpenRouter and a16z found that programming (coding assistants, debugging) and roleplay (interactive storytelling, character creation) have become the most dominant use cases for AI, shifting from earlier general query patterns.

- Shift to Hybrid AI Stacks: Users are increasingly building "hybrid AI stacks," utilizing different models for specific tasks (e.g., one model for code generation, another for creative writing) rather than relying on a single, monolithic AI. This emphasizes orchestration and targeted model selection.

- Focus on Reliability and Customization: While new features grab headlines, the current industry push is towards improving AI chatbot speed, reliability, and customizability. This reflects a maturation from novelty to practical, dependable tools for users.

"The balance between reasoning versus non reasoning tokens completely shifted over the course of the year. The dominant use case by far has become programming. The other use case that dominates is role..."

4. No Priors: Artificial Intelligence | Technology | Startups: "The Future of Voice AI: Agents, Dubbing, and Real-Time Translation with ElevenLabs Co-Founder Mati Staniszewski"

Guests: David Hsu (Host), Mati Staniszewski (Guest, Co-founder of ElevenLabs)

Runtime: 59:00 | Vibe: Visionary exploration of sound and AI

Key Signals:

- Voice as the Ultimate Interface: Staniszewski posits that voice will become the primary interface for many technological interactions, ranging from smartphones and computers to robotics, due to its natural and intuitive nature.

- Foundational Audio Models: ElevenLabs is building foundational models for audio, similar to how large language models function for text. This enables human-like speech generation, real-time translation, and preserving emotional nuance across languages, radically improving dubbing.

- AI Personal Assistants/Agents: The long-term vision includes highly interactive AI personal assistants and agents that can adapt to individual users, offer personalized tutoring, and manage complex tasks through natural voice commands, becoming deeply integrated into daily life.

"Voice is the interface of the future. As you think about the devices around us, whether it's smartphones, whether it's computers, whether it's robots, speech will be one of the key ones."

5. Azeem Azhar's Exponential View: "What it will take for AI to scale (energy, compute, talent)"

Guests: Azeem Azhar (Host)

Runtime: 25:00 | Vibe: Realistic assessment of AI's physical constraints

Key Signals:

- Electricity as a Bottleneck: Beyond compute power, the physical constraint of electricity supply for data centers is emerging as a critical factor influencing AI's widespread adoption. The demand for energy by new AI infrastructure risks outpacing current capacity.

- Absorption Over Innovation: The real challenge for AI over the next two years isn't just technological advancement, but the economy's ability to absorb and integrate these new capabilities. This includes economic viability and societal acceptance.

- Mid-2026 as a Turning Point: The mid-point of 2026 is projected as a key period where the enterprise world will be evaluating the ROI and real-world results of their AI investments, determining whether current enthusiasm translates into sustained value.

"The real constraint is electricity. It's the physical limitations of this software and whether existing systems can absorb the demands being put on them."

6. AI Breakdown: "Episode 1,001: The Big Ideas That Stood Out in AI"

Guests: Nathaniel Whittemore (Host)

Runtime: 15:00 | Vibe: Reflective celebration of community and learning

Key Signals:

- Consistency Beats Talent: In the rapidly evolving AI space, consistent engagement and continuous learning are more valuable than sporadic bursts of talent. This applies to both creators and learners in the AI community.

- Human Element in AI: AI's meaning and impact are ultimately derived from the people who use it. The human element, including community, perspective, and application, remains central to understanding AI's relevance.

- Growth Through Iteration: The podcast's milestone reflects the power of iterative growth and adaptation in a fast-changing domain. Over a thousand episodes represent an ongoing dialogue and learning journey about AI.

"Consistency beats talent."

7. The AI Daily Brief: Artificial Intelligence News and Analysis: "The Architects of AI That TIME Missed"

Guests: Nathaniel Whittemore (Host)

Runtime: 14:00 | Vibe: Critical look at AI power structures

Key Signals:

- Overlooked Global Players: TIME's "Architects of AI" list was criticized for largely overlooking significant global players, particularly Chinese AI companies (DeepSeek, Alibaba, ByteDance) and the influence of the Chinese Communist Party (CCP) and its policies on AI development.

- Capital Allocators and Geopolitics: The episode highlights the crucial roles of major capital allocators (venture capitalists, sovereign wealth funds) and entire nation-states (e.g., Middle East's investment in AI) in shaping the AI landscape, extending beyond just tech companies.

- Enterprise Operators and Cultural Translators: Enterprise operators who deploy AI and "cultural translators" who bridge the gap between technical AI and real-world impact are critical but often unacknowledged architects of AI's widespread influence.

"If we are trying to have a true articulation of the architects of AI, you have to include the leadership at companies like Deepseek, Alibaba, ByteDance, and you have to view the CCP and their policies..."

8. The AI Daily Brief: Artificial Intelligence News and Analysis: "Why AI Advantage Compounds"

Guests: Nathaniel Whittemore (Host)

Runtime: 12:00 | Vibe: Urgent warning for AI laggards

Key Signals:

- Compounding AI Advantage: New data from OpenAI, Menlo Ventures, and EY indicates that AI advantage is compounding, not linear. Leading organizations are using AI more intensively and reinvesting the resulting productivity gains to further amplify their capabilities.

- Widening Gap Between Leaders and Laggards: The compounding nature of AI benefits is creating an increasing disparity between AI-forward companies and those lagging behind. Leaders grow faster, widening the gap.

- High Productivity Gains for Leaders: 96% of surveyed AI leaders are reporting significant AI-driven productivity gains, demonstrating the tangible business impact for those who successfully integrate AI at scale.

"AI advantage is proving to be compounding, not linear."

9. "The Cognitive Revolution" | AI Builders, Researchers, and Live Player Analysis: "Superintelligence: To Ban or Not to Ban? Max Tegmark & Dean Ball join Liron Shapira on Doom Debates"

Guests: Liron Shapira (Host), Max Tegmark (Guest, MIT Professor, President of Future of Life Institute), Dean Ball (Guest, independent AI researcher)

Runtime: 1:30:00 | Vibe: Intense philosophical and practical debate on AI regulation

Key Signals:

- Arguments for Banning Superintelligence: Max Tegmark advocates for a ban or extreme regulation on superintelligence development until there's scientific consensus and public support for safe deployment, citing existential risk far exceeding that of nuclear weapons.

- Arguments Against Banning Superintelligence: Dean Ball argues against a ban, stating that the concept of "superintelligence" is too nebulous and undefined for effective regulation. He also expresses concern that a ban could hinder beneficial AI advancements, create monopolies, or fail to achieve international cooperation.

- Regulation Challenges: The debate highlights the immense practical and ethical challenges of regulating an undefined future technology. It questions the feasibility of an international ban and the potential for unintended consequences like black markets or stifled innovation.

"I would argue that artificial superintelligence is vastly more powerful in terms of the downside than hydrogen bombs would ever be."

10. The AI Daily Brief: Artificial Intelligence News and Analysis: "The State of Enterprise AI"

Guests: Nathaniel Whittemore (Host)

Runtime: 13:00 | Vibe: Snapshot of enterprise AI adoption trends

Key Signals:

- Coding as the "Killer App" for Enterprise AI: For many enterprises, AI's most impactful initial use case is in coding assistants and related developer tools, significantly boosting productivity and efficiency within software development.

- Accelerating Enterprise Adoption and Widening Divide: AI adoption in enterprises is rapidly increasing, but this acceleration is further separating AI leaders from laggards. Leaders are integrating AI across their operations, while others struggle with initial steps.

- Formalizing AI Skills and Governance: Initiatives like OpenAI’s AI certification courses and organizations like the Agentic AI Foundation signify a growing need for standardized AI skills, governance frameworks, and ethical guidelines within enterprises.

"Coding is clearly the first killer app."

11. HBR IdeaCast: "Moving Beyond the Slow, Hierarchical Organization"

Guests: Alison Beard (Host), Curt Nickisch (Host), Jana Werner (Guest, organizational design expert and author)

Runtime: 28:00 | Vibe: Practical guide to modern organizational structures

Key Signals:

- The "Octopus Organization" Metaphor: Jana Werner introduces the "octopus organization" as an ideal model for modern enterprises, featuring distributed decision-making, empowered teams (tentacles), and decentralized intelligence that can work both separately and collaboratively.

- Agility and Customer-Centricity: The core tenets of this organizational model are agility, continuous adaptation, and an obsessive focus on customer needs. This contrasts sharply with traditional, rigid hierarchies that struggle to innovate and respond quickly.

- Breaking Down Bureaucracy: The episode emphasizes that transforming into an agile, adaptable organization requires actively breaking down bureaucratic hurdles and empowering teams closest to the customer and the work.

"The ideal modern organization should be like an octopus with tentacles that work separately but also together with distributed intelligence."