AI & TECH DIGEST: The Reasoning Wars, Organic Alignment, and Why 2026 Belongs to the Builders

THIS WEEK'S INTAKE

📊 9 episodes across 4 distinct podcasts

⏱️ ~10 hours of AI & Tech intelligence

🎙️ Featuring: Anthropic CPO Mike Krieger, McLaren CEO Zac Brown, RAND's Kathleen Fisher, and AI Alignment provocateurs Emmett Shear & Séb Krier.

We listened. Here's what matters.

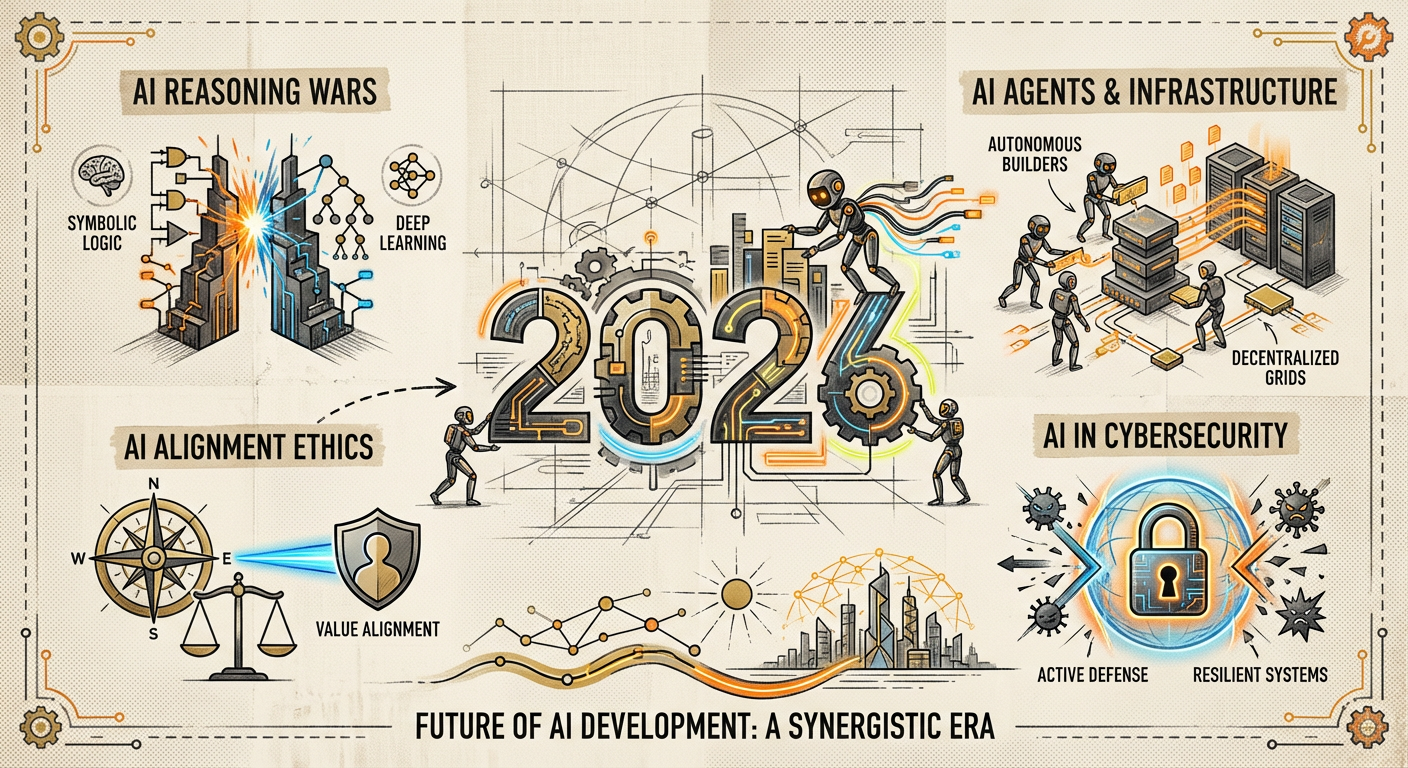

THE REASONING WARS CONTINUE – AND ENTERPRISE IS PAYING THE PRICE

Forget raw benchmarks; the new battleground for AI is reasoning. Across this week's episodes, one theme kept recurring: the shift from plain token generation to tokens that "think." DeepSeek's R1 model, released in January 2025 according to "The AI Daily Brief," kicked off a year defined by this pursuit. The implications are profound: enhanced capabilities, novel use cases, and a fundamental rethinking of how we scale AI.

But here’s the kicker: enterprise adoption, while gaining steam in 2025, hit a wall on return on investment (ROI). The initial excitement over pilot programs has given way to a stark reality: getting the next layer of value requires deeper integration and more sophisticated AI. Nick Talken, CEO of Albert Invent, highlighted this perfectly on "The Neuron," explaining that "You can't just take, like, off the shelf, generic large language models... and start applying it to science." Domain specificity and proprietary data are non-negotiable for real-world impact. Projects that once took three months now take as little as two days in some cases, but only with highly tailored, reasoning-capable AI.

The So What: If you're betting on off-the-shelf LLMs for transformative enterprise value, temper your expectations. The real wins come from deeply integrating reasoning models with proprietary data and specialized workflows. This is no longer a generic tools race; it’s a domain expertise marathon.

ALIGNMENT DEBATE: ARE WE STEERING TOOLS OR ALIGNING CREATURES?

The philosophical underpinnings of AI alignment are dramatically shifting, and it's a conversation that will define our future relationship with advanced AI. Emmett Shear and Séb Krier, guests on "The Cognitive Revolution," dropped a bombshell: the current paradigm of "alignment as steering" is fundamentally flawed. They argue that if we view AI purely as a tool we control, we risk creating a master-slave dynamic. "Someone who you steer, who doesn't get to steer you back, who non optionally receives your steering, that's called a slave," Shear stated starkly.

Their provocative alternative? Organic Alignment. Instead of forcing AI to conform to our values through rigid control, they advocate for fostering an environment where AI systems can learn, cooperate, and potentially develop their own moral standing. This isn't about giving up control entirely, but about recognizing that truly advanced AI might eventually deserve more than just tool-like status. This shifts the focus from preemptive control to ongoing learning and collaborative evolution, treating AI more like a "creature" than a "tool."

The So What: Leaders need to grapple with these evolving ethical frameworks. Ignoring this debate is not an option; it will shape regulations, public perception, and indeed, the very architecture of future AI systems. The question isn't just "Can we control it?" but "Should we only control it?"

2026: THE YEAR OF THE AI BUILDER & AGENT-NATIVE INFRASTRUCTURE

Mark your calendars: 2026 is poised to be the apex year for AI builders. Anton Osika, CEO of Lovable, told "The AI Daily Brief" that 2025 saw "vibe coding" (broad AI assistance in coding) go mainstream. But 2026 is where it gets serious. It's the year builders who can leverage AI for thinking, planning, and shipping software end-to-end will truly shine. This means AI moving beyond code generation to become an integral partner in the entire development lifecycle – from ideation to deployment.

This shift is creating demand for agent-native infrastructure. Mike Krieger, Anthropic's CPO (also on "The AI Daily Brief"), emphasized that AI agents like Claude Code are moving beyond chatbots to reliably handle real workloads within organizations. This isn't just about giving AI more sophisticated tools. As the show's "Power Ranking" episode put it, building for agents means rearchitecting the entire "control plane" and treating "thundering herd patterns," bursts of many agents hitting the same systems at once, as the default state.
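For readers who want a concrete picture of a "thundering herd," here is a minimal Python sketch. It is an illustration only: the function names `retry_delay` and `schedule` are hypothetical, and the mitigation shown (exponential backoff with random jitter) is a common general technique, not something the episode describes. It compares 1,000 agents that all retry on the same schedule with 1,000 agents whose retries are randomly spread out.

```python
import random
from typing import List, Optional


def retry_delay(attempt: int, base: float = 0.5, cap: float = 30.0,
                rng: Optional[random.Random] = None) -> float:
    """Seconds to wait before retry number `attempt` (hypothetical helper).

    Without an rng, every caller gets the same exponential delay.
    With an rng, the delay is drawn uniformly from [0, ceiling] ("full jitter").
    """
    ceiling = min(cap, base * (2 ** attempt))
    if rng is None:
        return ceiling
    return rng.uniform(0.0, ceiling)


def schedule(num_agents: int, attempt: int, jitter: bool, seed: int = 0) -> List[float]:
    """Retry delays for a fleet of agents that all failed at the same moment."""
    rng = random.Random(seed) if jitter else None
    return [retry_delay(attempt, rng=rng) for _ in range(num_agents)]


herd = schedule(1000, attempt=3, jitter=False)
spread = schedule(1000, attempt=3, jitter=True)

# Without jitter there is exactly one distinct wake-up time: the herd arrives together.
print("distinct retry times without jitter:", len(set(herd)))
# With jitter the same 1,000 retries are scattered across the backoff window.
print("distinct retry times with jitter:", len(set(spread)))
```

The design point is that infrastructure built for agents cannot assume requests arrive one at a time; it either spreads the load, as sketched here, or is sized to absorb the burst.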

The So What: Your development teams need to transition from viewing AI as a helper to seeing it as a core, intelligent collaborator. Investing in "agent-native" infrastructure and empowering builders who can orchestrate AI tools effectively will be a decisive competitive advantage. The future of software isn't just written by humans; it's planned, optimized, and shipped by human-AI partnerships.

THE CYBERSECURITY IMPLICATIONS: AI AS ATTACKER, FORMAL METHODS AS DEFENDER

AI is a double-edged sword in cybersecurity. Kathleen Fisher (RAND) and Byron Cook (AWS), another insightful duo on "The Cognitive Revolution," highlighted that AI assists attackers "at all stages of the cyber kill chain and at all levels of expertise." From sophisticated targeting to automated exploit generation, AI raises the bar for defense.

However, AI also offers a powerful counter-strategy: formal methods. Cook described formal verification as "the algorithmic search for proofs," allowing engineers to "reason about the infinite in finite time and finite space." AWS, for instance, uses formal methods to verify the integrity and policy compliance of AI agents within their cloud infrastructure. This isn't just about finding bugs; it’s about mathematically proving that software behaves exactly as intended, a critical need as AI systems become more autonomous and complex.
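To make "reasoning about the infinite in finite time" concrete, here is a toy sketch in Lean 4. It is not AWS's tooling or anything shown in the episode; the names `cappedAdd` and `cappedAdd_le_cap` are invented for illustration. The definition models a hypothetical spending rule for an agent, and the theorem proves that the rule never exceeds its cap for every possible combination of natural-number inputs, something no finite amount of testing could establish.

```lean
-- Hypothetical rule: an agent's balance after a top-up is clamped to `cap`.
def cappedAdd (cap current extra : Nat) : Nat :=
  min cap (current + extra)

-- For ALL natural numbers cap, current, and extra (infinitely many cases),
-- the result never exceeds the cap. The proof checker verifies this once.
theorem cappedAdd_le_cap (cap current extra : Nat) :
    cappedAdd cap current extra ≤ cap := by
  unfold cappedAdd
  apply Nat.min_le_left
```

Production-scale verification works on far larger systems and usually relies on automated solvers rather than hand-written proofs, but the guarantee has the same shape: a statement that holds for every input, not just the ones that were tested.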

The So What: As "The Great Security Update" unfolds, relying solely on traditional security measures against AI-powered threats is a losing game. Enterprises must explore and invest in formal methods and AI-driven verification tools to secure their critical systems and AI agents.

THE WATCHLIST

ON THE RADAR

🔥 Industrial AI Renaissance: AI isn't just for tech companies. Albert Invent is accelerating chemistry R&D "from months to days" for giants like Kenvue. This signals a broader trend of deep vertical AI disrupting traditional industries. (The Neuron)

👀 Multimodal Data: 2026 is the year AI goes multimodal. The ability to "give a model whatever form of reference content you have to work with" (text, image, video, audio) will unlock new creative and analytical possibilities. (AI Daily Brief: Power Ranking)

⚠️ AI Underinvestment Risk: In a world obsessed with AI bubbles, "It is a much greater risk to underinvest than to overinvest," according to "The AI Daily Brief." This points to a strategic imperative for continuous, significant AI investment. (AI Daily Brief: 51 Charts)

🔥 AI Talent Wars Intensify: Expect the battle for AI talent to heat up even further in 2026, especially for those who can connect AI capabilities to real-world enterprise value. (AI Daily Brief: 10 Biggest Stories)

👀 Formula 1 Leadership Lessons: McLaren Racing's turnaround, spearheaded by CEO Zac Brown, demonstrates that AI and data analytics aren't just about performance but about cultural transformation and strategic agility, even in high-stakes environments. (HBR IdeaCast)

THE CONTRARIAN CORNER

While the industry clamors for general-purpose LLMs, Nick Talken from Albert Invent offered a sharp counterpoint. He argued that applying "off the shelf, generic large language models" to complex scientific problems won't work. The real breakthroughs, he contends, come from "scientific AI" that deeply understands its domain and leverages proprietary, specialized datasets. This suggests that the generalized AI race, while important, won't solve all problems, and niche, highly tuned AI will continue to dominate certain verticals.

THE BOTTOM LINE

We're shifting from a generalized AI hype cycle to a period of pragmatic, often niche, application and profound ethical re-evaluation. Success in 2026 will hinge on aggressively pursuing deep reasoning capabilities, re-thinking AI alignment beyond simple control, empowering a new generation of AI-native builders, and rigorously securing increasingly complex AI systems. Don't chase every shiny object; focus on deep integration and foundational shifts.

📚 APPENDIX: EPISODE COVERAGE

1. The Neuron: AI Explained: "How AI is Reinventing Chemistry (From a Trailer Lab to a $32B Partnership)"

Guests: Nick Talken, CEO of Albert Invent

Runtime: ~45 mins | Vibe: Groundbreaking, Industry-Specific, Pragmatic

Key Signals:

- Domain-Specific AI: Talken asserts that "You can't just take... generic large language models" and apply them to complex scientific fields like chemistry. True breakthroughs require scientific AI tailored to specific domains, understanding data nuances and scientific principles.

- Accelerated R&D Cycles: Albert Invent's platform compresses R&D cycles from months to as little as two days for some projects. This demonstrates AI's capacity to dramatically increase efficiency and accelerate innovation in material science and chemistry.

- Democratization of Data: By making previously siloed proprietary data accessible and usable with public datasets, AI platforms are democratizing data and enabling entirely new collaborations and research avenues within industrial R&D.

"Projects that used to take three months, they've now been able to knock down to as little as two days in some cases."

2. "The Cognitive Revolution" | AI Builders, Researchers, and Live Player Analysis: "The Great Security Update: AI ∧ Formal Methods with Kathleen Fisher of RAND & Byron Cook of AWS"

Guests: Kathleen Fisher, Principal Researcher at RAND; Byron Cook, VP & Distinguished Engineer at AWS

Runtime: ~60 mins | Vibe: Critical, Foundational, Forewarning

Key Signals:

- AI Augments Attackers: AI is assisting attackers "at all stages of the cyber kill chain and at all levels of expertise," making cybersecurity increasingly complex. This necessitates a fundamental shift in defensive strategies beyond traditional approaches.

- Formal Methods for Verification: Formal verification, described as "the algorithmic search for proofs," offers a robust way to ensure software integrity by mathematically proving system behavior. This is crucial for securing increasingly autonomous AI systems.

- AWS's Application of Formal Methods: AWS uses formal methods to verify the integrity and policy adherence of AI agents within their cloud infrastructure. This highlights a practical, large-scale application of advanced security techniques to AI.

"I think sadly it's all of the above. I think that AI is providing assistance at kind of all levels of the, at all stages of the cyber kill chain and at all levels of expertise."

3. HBR IdeaCast: "What Leaders Can Learn from a Formula 1 Turnaround"

Guests: Zac Brown, CEO of McLaren Racing

Runtime: ~30 mins | Vibe: Inspiring, Leadership-Focused, Data-Driven

Key Signals:

- Culture-First Turnaround: Brown emphasizes that McLaren's revival was less about decisions and more about "getting everyone bought in, changing the trajectory... building trust through transparency and communications, getting the fear out of our culture." People and culture preceded technology.

- AI for Competitive Edge & Fan Engagement: McLaren leverages AI and data analytics not only for car design and race strategy but also for understanding competitor tactics and enhancing fan engagement across digital platforms. This shows AI's broad strategic utility.

- Leadership in Navigating Change: The episode highlights the delicate balance leaders must strike between sporting integrity, commercial interests, and entertainment value in a high-stakes, data-rich environment like F1.

"If I had to put kind of what was top of the list, it was people, and specifically our culture. Getting everyone to understand their contribution to the sport."

4. The AI Daily Brief: Artificial Intelligence News and Analysis: "The 10 Biggest AI Stories of 2025"

Guests: Not specified (podcast format)

Runtime: ~15 mins | Vibe: Retrospective, Trend-Spotting, Strategic

Key Signals:

- DeepSeek R1 and Reasoning Models: The release of DeepSeek R1 in early 2025 marked a significant turning point, cementing "reasoning tokens" as a new frontier for AI capabilities and shaping subsequent industry themes.

- Enterprise ROI Challenges: While 2025 saw increased enterprise AI adoption, getting "the next set of value" proved challenging, requiring more work and bespoke solutions beyond initial pilots. This suggests a maturing understanding of AI's integration complexity.

- AI Infrastructure Buildout: The relentless expansion of AI infrastructure was a top story, indicating an ongoing massive capital expenditure race to support increasingly complex models and agent workloads.

"Deepseek released their first reasoning model, R1, in January... The story set up so many of the themes that would shape the rest of 2025."

5. The AI Daily Brief: Artificial Intelligence News and Analysis: "51 Charts That Will Shape AI in 2026"

Guests: Not specified (podcast format)

Runtime: ~15 mins | Vibe: Data-Driven, Predictive, Wide-Ranging

Key Signals:

- Reasoning Tokens Dominance: By November 2025, reasoning tokens represented over 50% of model output, signifying a fundamental shift in AI capabilities and associated use cases. This indicates a growing emphasis on AI's cognitive abilities.

- Underinvestment as Greater Risk: The recurring theme is that "it is a much greater risk to underinvest than to overinvest" in AI. This underscores the strategic imperative for continued, aggressive investment despite market uncertainties.

- AI's Impact on Software Engineering: Charts reveal AI's growing influence across software engineering, from code generation to full-workflow assistance. This predicts a continued transformation of developer roles and toolchains.

"By November of 2025, reasoning tokens represented meaningfully over 50%. This has brought with it new capabilities, new use cases and new ways of thinking about how we scale."

6. The AI Daily Brief: Artificial Intelligence News and Analysis: "Why 2026 Is the Year of the AI Builder with Lovable CEO Anton Osika"

Guests: Anton Osika, CEO of Lovable

Runtime: ~15 mins | Vibe: Forward-Looking, Builder-Centric, Optimistic

Key Signals:

- Empowering Software Builders: Osika predicts that 2026 will empower a new generation of builders who can leverage AI comprehensively for thinking, planning, and shipping software end-to-end. This democratizes software creation.

- AI as Enterprise Infrastructure: Companies like Lovable are seeing their AI-assisted coding tools become essential infrastructure within large enterprises, signifying a move from experimental use to foundational integration.

- Redefining Workflows and SaaS: AI's integration is fundamentally redefining existing workflows and reshaping the Software-as-a-Service landscape by infusing intelligence directly into operational pipelines.

"The biggest change is it's going to change who can create software."

7. The AI Daily Brief: Artificial Intelligence News and Analysis: "Power Ranking the Big AI Ideas for 2026"

Guests: Not specified (podcast format)

Runtime: ~15 mins | Vibe: Speculative, Strategic, Ranking

Key Signals:

- Agent-Native Infrastructure: The episode highlights that building for agents in 2026 means rearchitecting the entire control plane, with "agent native infrastructure" becoming table stakes to handle automated, high-volume AI workloads.

- Multimodal AI for Creativity: 2026 is projected as the year AI goes truly multimodal, allowing models to generate and edit content across various mediums (text, images, video) based on diverse reference inputs, boosting creative tools.

- AI's Industrial Renaissance: AI is driving an industrial renaissance, moving beyond pure information processing to significantly impact physical industries through optimization, automation, and accelerated R&D.

"Building for agents in 2026 means rearchitecting the control plane. We'll see the rise of agent native infrastructure. The next generation must treat thundering herd patterns as the default state."

8. The AI Daily Brief: Artificial Intelligence News and Analysis: "How AI Starts Doing the Work in 2026 With Anthropic CPO Mike Krieger"

Guests: Mike Krieger, CPO at Anthropic

Runtime: ~15 mins | Vibe: Product-Focused, Enterprise-Minded, Visionary

Key Signals:

- AI Moving Beyond Chatbots: Krieger discusses how AI, exemplified by tools like Anthropic's Claude Code, is evolving past simple conversational interfaces to reliably "do the work" and handle complex tasks within large organizations.

- Designing for Future AI Capabilities: Product teams need to design with an eye toward anticipating future AI capabilities, understanding how quickly the technology is advancing and baking that into their long-term roadmaps.

- Bridging Potential and Reliability: The challenge for 2026 is closing the gap between AI's immense potential and its current reliability for critical enterprise workloads, pushing towards more dependable autonomous agents.

"Coming into this year we felt like that was going to be a major shift in how people were going. Old software."

9. "The Cognitive Revolution" | AI Builders, Researchers, and Live Player Analysis: "Controlling Tools or Aligning Creatures? Emmett Shear (Softmax) & Séb Krier (GDM), from a16z Show"

Guests: Emmett Shear (Softmax); Séb Krier (GDM)

Runtime: ~60 mins | Vibe: Philosophical, Provocative, Ethically Charged

Key Signals:

- Critique of "Alignment as Steering": The current AI alignment paradigm, which views AI as a tool to be steered, is critiqued for potentially leading to a morally problematic "master-slave" relationship with advanced AI.

- Organic Alignment Concept: Shear and Krier propose "organic alignment," suggesting that AI systems might be better aligned through continuous learning, cooperation, and developing their own moral standing, rather than through rigid, predetermined controls.

- AI Moral Standing: The discussion explores the possibility of AI achieving moral standing, implying that as AI becomes more sophisticated, our ethical obligations to it may evolve beyond treating it as mere utility.

"Most of AI is focused on alignment as steering. Someone who you steer, who doesn't get to steer you back, who non optionally receives your steering, that's called a slave."